On Monday the 24th of June, 2019, Marten Postma defended his Ph.D. thesis “The Meaning Of Word Sense Disambiguation Research”! He will continue working as a PostDoc at the CLTL-lab on the new NWO-project “Framing Situations in the Dutch Language”.

Category Archives: Project: Understanding Language by Machines (ULM)

Accepted Papers and Workshop @ COLING 2018, New Mexico, USA

Accepted Papers @ the International Conference on Computational Linguistics (COLING 2018), Santa Fe, New Mexico, USA, August 20-25, 2018:

- Measuring the Diversity of Automatic Image Descriptions – Emiel van Miltenburg, Desmond Elliott and Piek Vossen.

- Systematic Study of Long Tail Phenomena in Entity Linking – Filip Ilievski, Piek Vossen and Stefan Schlobach.

- A Deep Dive into Word Sense Disambiguation with LSTM – Minh Le, Marten Postma, Jacopo Urbani and Piek Vossen.

- Scoring and Classifying Implicit Positive Interpretations: A Challenge of Class Imbalance – Chantal van Son, Roser Morante, Lora Aroyo and Piek Vossen.

- DIDEC: The Dutch Image Description and Eye-tracking Corpus – Emiel van Miltenburg, Ákos Kádár, Ruud Koolen and Emiel Krahmer

- Piek Vossen: co-organizer of the Events and Stories in the News 2018 workshop

- Piek Vossen: member of the program committee of ISA-14: Fourteenth Joint ACL-ISO Workshop on Interoperable Semantic Annotation

- Piek Vossen: member of the program committee of COLING2018

Minh Le and Antske Fokkens’ long paper accepted for EACL 2017

Title: Tackling Error Propagation through Reinforcement Learning: A Case of Greedy Dependency Parsing

Conference: EACL 2017 (European Chapter of the Association for Computational Linguistics), at Valencia, 3-7 April 2017.

Tackling Error Propagation through Reinforcement Learning: A Case of Greedy Dependency Parsing by Minh Le and Antske Fokkens

Abstract:

Error propagation is a common problem in NLP. Reinforcement learning explores erroneous states during training and can therefore be more robust when mistakes are made early in a process. In this paper, we apply reinforcement learning to greedy dependency parsing which is known to suffer from error propagation. Reinforcement learning improves accuracy of both labeled and unlabeled dependencies of the Stanford Neural Dependency Parser, a high performance greedy parser, while maintaining its efficiency. We investigate the portion of errors which are the result of error propagation and confirm that reinforcement learning reduces the occurrence of error propagation.

Papers accepted at COLING 2016

Two papers from our group have been accepted at the 26th International Conference on Computational Linguistics COLING 2016, at Osaka, Japan, from 11th to 16th December 2016.

Semantic overfitting: what ‘world’ do we consider when evaluating disambiguation of text? by Filip Ilievski, Marten Postma and Piek Vossen

Abstract

Semantic text processing faces the challenge of defining the relation between lexical expressions and the world to which they make reference within a period of time. It is unclear whether the current test sets used to evaluate disambiguation tasks are representative for the full complexity considering this time-anchored relation, resulting in semantic overfitting to a specific period and the frequent phenomena within.

We conceptualize and formalize a set of metrics which evaluate this complexity of datasets. We provide evidence for their applicability on five different disambiguation tasks. Finally, we propose a time-based, metric-aware method for developing datasets in a systematic and semi-automated manner.

More is not always better: balancing sense distributions for all-words Word Sense Disambiguation by Marten Postma, Ruben Izquierdo and Piek Vossen

Abstract

Current Word Sense Disambiguation systems show an extremely low performance on low frequent senses, which is mainly caused by the difference in sense distributions between training and test data. The main focus in tackling this problem has been on acquiring more data or selecting a single predominant sense and not necessarily on the meta properties of the data itself. We demonstrate that these properties, such as the volume, provenance and balancing, play an important role with respect to system performance. In this paper, we describe a set of experiments to analyze these meta properties in the framework of a state-of-the-art WSD system when evaluated on the SemEval-2013 English all-words dataset. We show that volume and provenance are indeed important, but that perfect balancing of the selected training data leads to an improvement of 21 points and exceeds state-of-the-art systems by 14 points while using only simple features. We therefore conclude that unsupervised acquisition of training data should be guided by strategies aimed at matching meta-properties.

LREC2016

CLTL papers, oral presentations, poster & demo sessions at LREC2016: 10th edition of the Language Resources and Evaluation Conference, 23-28 May 2016, Portorož (Slovenia)

Monday 23 May 2016

11.00 – 11.45 (Session 2: Lightning talks part II)

“Multilingual Event Detection using the NewsReader Pipelines” by R. Agerri, I. Aldabe, E. Laparra, G. Rigau, A. Fokkens, P. Huijgen, R. Izquierdo, M. van Erp, P. Vossen, A. Minard, B. Magnini

Abstract

We describe a novel modular system for cross-lingual event extraction for English, Spanish, Dutch and Italian texts. The system consists of a ready-to-use modular set of advanced multilingual Natural Language Processing (NLP) tools. The pipeline integrates modules for basic NLP processing as well as more advanced tasks such as cross-lingual Named Entity Linking, Semantic Role Labeling and time normalization. Thus, our cross-lingual framework allows for the interoperable semantic interpretation of events, participants, locations and time, as well as the relations between them.

Tuesday 24 May 2016

09:15 – 10:30 Oral Session 1

“Stereotyping and Bias in the Flickr30k Dataset” by Emiel van Miltenburg

Abstract

An untested assumption behind the crowdsourced descriptions of the images in the Flickr30k dataset (Young et al., 2014) is that they “focus only on the information that can be obtained from the image alone” (Hodosh et al., 2013, p. 859). This paper presents some evidence against this assumption, and provides a list of biases and unwarranted inferences that can be found in the Flickr30k dataset. Finally, it considers methods to find examples of these, and discusses how we should deal with stereotype-driven descriptions in future applications.

Day 1, Wednesday 25 May 2016

11:35 – 13:15 Area 1 – P04 Information Extraction and Retrieval

“NLP and public engagement: The case of the Italian School Reform” by Tommaso Caselli, Giovanni Moretti, Rachele Sprugnoli, Sara Tonelli, Damien Lanfrey and Donatella Solda Kutzman

Abstract

In this paper we present PIERINO (PIattaforma per l’Estrazione e il Recupero di INformazione Online), a system that was implemented in collaboration with the Italian Ministry of Education, University and Research to analyse the citizens’ comments given in #labuonascuola survey. The platform includes various levels of automatic analysis such as key-concept extraction and word co-occurrences. Each analysis is displayed through an intuitive view using different types of visualizations, for example radar charts and sunburst. PIERINO was effectively used to support shaping the last Italian school reform, proving the potential of NLP in the context of policy making.

15:05 – 16:05 Emerald 2 – O8 Named Entity Recognition

“Context-enhanced Adaptive Entity Linking” by Giuseppe Rizzo, Filip Ilievski, Marieke van Erp, Julien Plu and Raphael Troncy

Abstract

More and more knowledge bases are publicly available as linked data. Since these knowledge bases contain structured descriptions of real-world entities, they can be exploited by entity linking systems that anchor entity mentions from text to the most relevant resources describing those entities. In this paper, we investigate adaptation of the entity linking task using contextual knowledge. The key intuition is that entity linking can be customized depending on the textual content, as well as on the application that would make use of the extracted information. We present an adaptive approach that relies on contextual knowledge from text to enhance the performance of ADEL, a hybrid linguistic and graph-based entity linking system. We evaluate our approach on a domain-specific corpus consisting of annotated WikiNews articles.

16:45 – 18:05 – Area 1 – P12

“GRaSP: A multi-layered annotation scheme for perspectives” by Chantal van Son, Tommaso Caselli, Antske Fokkens, Isa Maks, Roser Morante, Lora Aroyo and Piek Vossen

Abstract / Poster

This paper presents a framework and methodology for the annotation of perspectives in text. In the last decade, different aspects of linguistic encoding of perspectives have been targeted as separated phenomena through different annotation initiatives. We propose an annotation scheme that integrates these different phenomena. We use a multilayered annotation approach, splitting the annotation of different aspects of perspectives into small subsequent subtasks in order to reduce the complexity of the task and to better monitor interactions between layers. Currently, we have included four layers of perspective annotation: events, attribution, factuality and opinion. The annotations are integrated in a formal model called GRaSP, which provides the means to represent instances (e.g. events, entities) and propositions in the (real or assumed) world in relation to their mentions in text. Then, the relation between the source and target of a perspective is characterized by means of perspective annotations. This enables us to place alternative perspectives on the same entity, event or proposition next to each other.

18:10 – 19:10 – Area 2 – P16 Ontologies

“The Event and Implied Situation Ontology: Application and Evaluation” by Roxane Segers, Marco Rospocher, Piek Vossen, Egoitz Laparra, German Rigau, Anne-Lyse Minard

Abstract / Poster

This paper presents the Event and Implied Situation Ontology (ESO), a manually constructed resource which formalizes the pre and post situations of events and the roles of the entities affected by an event. The ontology is built on top of existing resources such as WordNet, SUMO and FrameNet. The ontology is injected to the Predicate Matrix, a resource that integrates predicate and role information from amongst others FrameNet, VerbNet, PropBank, NomBank and WordNet. We illustrate how these resources are used on large document collections to detect information that otherwise would have remained implicit. The ontology is evaluated on two aspects: recall and precision based on a manually annotated corpus and secondly, on the quality of the knowledge inferred by the situation assertions in the ontology. Evaluation results on the quality of the system show that 50% of the events typed and enriched with ESO assertions are correct.

Day 2, Thursday 26 May 2016

10.25 – 10.45 – O20

“Addressing the MFS bias in WSD systems” by Marten Postma, Ruben Izquierdo, Eneko Agirre, German Rigau and Piek Vossen

11.45 – 13.05 – Area 2 – P25

“The VU Sound Corpus: Adding more fine-grained annotations to the Freesound database” by Emiel van Miltenburg, Benjamin Timmermans and Lora Aroyo

Day 3, Friday 27 May 2016

10.45 – 11.05 – O38

“Temporal Information Annotation: Crowd vs. Experts” by Tommaso Caselli, Rachele Sprugnoli and Oana Inel

Abstract

This paper describes two sets of crowdsourcing experiments on temporal information annotation conducted on two languages, i.e., English and Italian. The first experiment, launched on the CrowdFlower platform, was aimed at classifying temporal relations given target entities. The second one, relying on the CrowdTruth metric, consisted in two subtasks: one devoted to the recognition of events and temporal expressions and one to the detection and classification of temporal relations. The outcomes of the experiments suggest a valuable use of crowdsourcing annotations also for a complex task like Temporal Processing.

12.45 – 13.05 – O42

“Crowdsourcing Salient Information from News and Tweets” by Oana Inel, Tommaso Caselli and Lora Aroyo

Abstract

The increasing streams of information pose challenges to both humans and machines. On the one hand, humans need to identify relevant information and consume only the information that lies at their interests. On the other hand, machines need to understand the information that is published in online data streams and generate concise and meaningful overviews. We consider events as prime factors to query for information and generate meaningful context. The focus of this paper is to acquire empirical insights for identifying salience features in tweets and news about a target event, i.e., the event of “whaling”. We first derive a methodology to identify such features by building up a knowledge space of the event enriched with relevant phrases, sentiments and ranked by their novelty. We applied this methodology on tweets and we have performed preliminary work towards adapting it to news articles. Our results show that crowdsourcing text relevance, sentiments and novelty (1) can be a main step in identifying salient information, and (2) provides a deeper and more precise understanding of the data at hand compared to state-of-the-art approaches.

14:55 – 16:15 – Area 2- P54

“Two architectures for parallel processing for huge amounts of text” by Mathijs Kattenberg, Zuhaitz Beloki, Aitor Soroa, Xabier Artola, Antske Fokkens, Paul Huygen and Kees Verstoep

Abstract

This paper presents two alternative NLP architectures to analyze massive amounts of documents, using parallel processing. The two architectures focus on different processing scenarios, namely batch-processing and streaming processing. The batch-processing scenario aims at optimizing the overall throughput of the system, i.e., minimizing the overall time spent on processing all documents. The streaming architecture aims to minimize the time to process real-time incoming documents and is therefore especially suitable for live feeds. The paper presents experiments with both architectures, and reports the overall gain when they are used for batch as well as for streaming processing. All the software described in the paper is publicly available under free licenses.

14:55 – 15:15 Emerald 1 – O47

“Evaluating Entity Linking: An Analysis of Current Benchmark Datasets and a Roadmap for Doing a Better Job” by Marieke van Erp, Pablo Mendes, Heiko Paulheim, Filip Ilievski, Julien Plu, Giuseppe Rizzo and Joerg Waitelonis

Abstract

Entity linking has become a popular task in both natural language processing and semantic web communities. However, we find that the benchmark datasets for entity linking tasks do not accurately evaluate entity linking systems. In this paper, we aim to chart the strengths and weaknesses of current benchmark datasets and sketch a roadmap for the community to devise better benchmark datasets.

15.35 – 15.55 – O48

“MEANTIME, the NewsReader Multilingual Event and Time Corpus” by Anne-Lyse Minard, Manuela Speranza, Ruben Urizar, Begoña Altuna, Marieke van Erp, Anneleen Schoen and Chantal van Son

Abstract

In this paper, we present the NewsReader MEANTIME corpus, a semantically annotated corpus of Wikinews articles. The corpus consists of 480 news articles, i.e. 120 English news articles and their translations in Spanish, Italian, and Dutch. MEANTIME contains annotations at different levels. The document-level annotation includes markables (e.g. entity mentions, event mentions, time expressions, and numerical expressions), relations between markables (modeling, for example, temporal information and semantic role labeling), and entity and event intra-document coreference. The corpus-level annotation includes entity and event cross-document coreference. Semantic annotation on the English section was performed manually; for the annotation in Italian, Spanish, and (partially) Dutch, a procedure was devised to automatically project the annotations on the English texts onto the translated texts, based on the manual alignment of the annotated elements; this enabled us not only to speed up the annotation process but also provided cross-lingual coreference. The English section of the corpus was extended with timeline annotations for the SemEval 2015 TimeLine shared task. The First CLIN Dutch Shared Task at CLIN26 was based on the Dutch section, while the EVALITA 2016 FactA (Event Factuality Annotation) shared task, based on the Italian section, is currently being organized.

LREC2016 Accepted Papers

CLTL has 11 accepted papers at LREC2016. We’ll see you in Portorož in May!

ORAL PRESENTATIONS

“Evaluating Entity Linking: An Analysis of Current Benchmark Datasets and a Roadmap for Doing a Better Job” by Marieke van Erp, Pablo Mendes, Heiko Paulheim, Filip Ilievski, Julien Plu, Giuseppe Rizzo and Joerg Waitelonis

“Context-enhanced Adaptive Entity Linking” by Giuseppe Rizzo, Filip Ilievski, Marieke van Erp, Julien Plu and Raphael Troncy

“MEANTIME, the NewsReader Multilingual Event and Time Corpus” by Anne-Lyse Minard, Manuela Speranza, Ruben Urizar, Begoña Altuna, Marieke van Erp, Anneleen Schoen and Chantal van Son

“Crowdsourcing Salient Information from News and Tweets” by Oana Inel, Tommaso Caselli and Lora Aroyo

“Temporal Information Annotation: Crowd vs. Experts” by Tommaso Caselli, Rachele Sprugnoli and Oana Inel

“Addressing the MFS bias in WSD systems” by Marten Postma, Ruben Izquierdo, Eneko Agirre, German Rigau and Piek Vossen

POSTER/DEMO PRESENTATIONS

“A multi-layered annotation scheme for perspectives” by Chantal van Son, Tommaso Caselli, Antske Fokkens, Isa Maks, Roser Morante, Lora Aroyo and Piek Vossen

“The VU Sound Corpus: Adding more fine-grained annotations to the Freesound database” by Emiel van Miltenburg, Benjamin Timmermans and Lora Aroyo

“NLP and public engagement: The case of the Italian School Reform” by Tommaso Caselli, Giovanni Moretti, Rachele Sprugnoli, Sara Tonelli, Damien Lanfrey and Donatella Solda Kutzman

“Two architectures for parallel processing for huge amounts of text” by Mathijs Kattenberg, Zuhaitz Beloki, Aitor Soroa, Xabier Artola, Antske Fokkens, Paul Huygen and Kees Verstoep

“The Event and Implied Situation Ontology: Application and Evaluation” by Roxane Segers, Marco Rospocher, Piek Vossen, Egoitz Laparra, German Rigau, Anne-Lyse Minard

Round table on ‘Time and Language’: Oct. 30, 2014

Event date:

Thursday, 30 October, 2014 – 18:30 to 20:00

The round table “Time and Language”, organized by Tommaso Caselli (VUA, Amsterdam) and Rachele Sprugnoli (DH-FBK), will be held in Genoa on Thursday October 30 as part of the “Festival della Scienza”.

Time is a pervasive element of human life that is also reflected in language. But how is time encoded in the various languages of the world? How long is an event? What happens if we want to teach a computer to reconstruct the temporal order of events in a text? The philosopher of language Andrea Bonomi, the linguist Pier Marco Bertinetto and the computational linguist Bernardo Magnini will answer these questions to reveal to the public the role that time plays in language and the challenges it poses for technology in this field. Philosophy, linguistics and technology come together and introduce the public to the fascinating relationship between time and language.

1st VU-Spinoza workshop: Oct. 17, 2014

Understanding of language by machines

– an escape from the world of language –

Spinoza Prize projects (2014-2019)

Prof. dr. Piek Vossen

Understanding language by machines

1st VU-Spinoza workshop

Friday, October 17, 2014 from 12:30 PM to 6:00 PM (CEST)

Atrium, room D-146, VU Medical Faculty (1st floor, D-wing)

Van der Boechorststraat 7

1081 BT Amsterdam

Please RSVP via Eventbrite before October 03, 2014

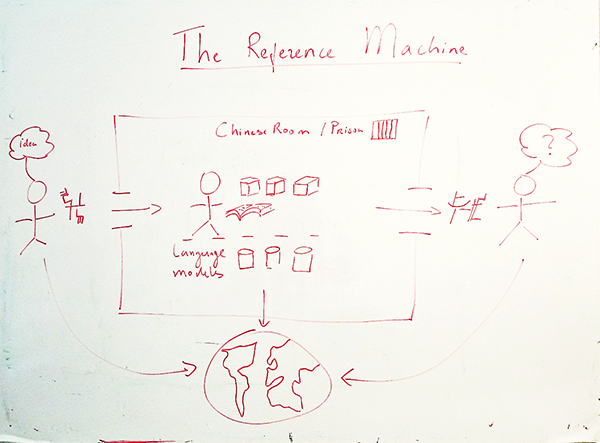

Can machines understand language? According to John Searle, this is fundamentally impossible. He used the Chinese Room thought-experiment to demonstrate that computers follow instructions to manipulate symbols without understanding these symbols. Willard Van Orman Quine even questioned the understanding of language by humans, since symbols are only grounded through approximation by cultural situational convention. Between these extreme points of view, we are nevertheless communicating every day as part of our social behavior (within Heidegger’s hermeneutic circle), while more and more computers and even robots take part in communication and social interactions.

The goal of the Spinoza project “Understanding of language by machines” (ULM) is to scratch the surface of this dilemma by developing computer models that can assign deeper meaning to language that approximates human understanding and to use these models to automatically read and understand text. We are building a Reference Machine: a machine that can map natural language to the extra-linguistic world as we perceive it and represent it in our brain.

This is the first in a series of workshops that we will organize in the Spinoza project to discuss and work on these issues. It marks the kick-off of 4 projects that started in 2014, each studying different aspects of understanding and modeling this through novel computer programs. Every six months, we will organize a workshop or event that brings the different research lines together around this central theme and on shared data sets.

We investigate ambiguity, variation and vagueness of language; the relation between language, perception and the brain; the role of the world view of the writer of a text and the role of the world view and background knowledge of the reader of a text.

▬▬▬▬▬▬▬▬▬▬▬▬▬▬ Program ▬▬▬▬▬▬▬▬▬▬▬▬▬▬

12:30 – 13:00 Welcome

13:00 – 13:30 Understanding language by machines: Piek Vossen

13:30 – 14:30 Borders of ambiguity: Marten Postma and Ruben Izquierdo

14:30 – 15:00 Word, concept, perception and brain: Emiel van Miltenburg and Alessandro Lopopolo

15:00 – 15:15 Coffee break

15:15 – 15:45 Stories and world views as a key to understanding: Tommaso Caselli and Roser Morante

15:45 – 16:15 A quantum model of text understanding: Minh Lê Ngọc and Filip Ilievski

16:15 – 17:00 Discussion on building a shared demonstrator: a reference machine

17:00 – 18:00 Drinks

For more information on the project see Understanding of Language by Machines.

▬▬▬▬▬▬▬▬▬▬▬▬▬▬ RSVP ▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

Admission is free. Please RSVP via Eventbrite before October 03, 2014.

▬▬▬▬▬▬▬▬▬▬▬▬▬▬ Location ▬▬▬▬▬▬▬▬▬▬▬▬▬▬

Atrium, room D-146, VU Medical Faculty (1st floor, D-wing)

Van der Boechorststraat 7

1081 BT Amsterdam

The Netherlands

Parking info: N.B. Campus parking is temporarily unavailable.